For quite a while there has been confusion over how VMware’s Transparent Page Sharing (TPS) feature works with vSphere 4 running on Nehalem (or other modern) processors. Many people noticed that TPS no longer appeared to be working and looked for ways to fix the problem.

In my recent post on the effects of ASLR in vSphere, the comments turned into a discussion about TPS on modern processors. There are also countless posts about this issue on the VMTN forums where folks are looking for a fix. In reality, nothing is broken and there is nothing to fix.

VMware has published a KB article that gives more information on TPS with Nehalem processors and why it appears TPS isn’t working (this affects modern AMD processors also). The short version is that TPS works on small pages (4K), while hosts with Nehalem processors use large pages (2MB). The ESX/ESXi host keeps track of which pages could be shared, and once memory is over-committed it breaks the large pages into small pages and begins sharing memory.
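To make the idea concrete, here is a rough Python sketch of the behavior described above. It is only a toy model, not how ESX actually implements TPS: the function names, the use of SHA-1 as the page hash, and the page contents are all assumptions made for illustration.

```python
import hashlib

SMALL_PAGE = 4 * 1024                        # 4K small page
LARGE_PAGE = 2 * 1024 * 1024                 # 2MB large page
SMALL_PER_LARGE = LARGE_PAGE // SMALL_PAGE   # 512 small pages per large page


def build_vm_pages(vm_id, zero_count=500, unique_count=12):
    """Hypothetical guest: one large page's worth of 4K pages,
    mostly zero-filled, plus a few pages unique to this VM."""
    zero_page = bytes(SMALL_PAGE)
    pages = [zero_page] * zero_count
    for i in range(unique_count):
        # Fill the page with a 4-byte pattern that differs per VM and per page.
        pattern = (vm_id * 1000 + i + 1).to_bytes(4, "big")
        pages.append(pattern * (SMALL_PAGE // 4))
    return pages


def backing_pages_needed(small_pages, overcommitted):
    """Count the 4K host pages needed to back the given guest pages."""
    if not overcommitted:
        # Large pages stay intact, so nothing is shared.
        return len(small_pages)
    # Large pages are broken up; identical small pages collapse to one copy.
    # (This toy model trusts the hash alone to decide two pages are identical.)
    unique = {hashlib.sha1(page).digest() for page in small_pages}
    return len(unique)


all_pages = build_vm_pages(1) + build_vm_pages(2)
print(backing_pages_needed(all_pages, overcommitted=False))
print(backing_pages_needed(all_pages, overcommitted=True))
```

With two such guests, the under-committed case backs every page, while the over-committed case keeps a single copy of the zero page plus each guest's unique pages.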

Many people think this is a bug in ESX that needs to be fixed. This likely started because when vSphere 4 was released there was a bug around memory usage on ESX hosts with Nehalem processors. In reality, the bug was that vCenter was triggering high memory usage alarms for virtual machines running in this configuration. Nothing was actually wrong, but because the host was using all of the assigned memory for the VM, vCenter was incorrectly triggering the alarm. That behavior has since been fixed with a patch and is no longer an issue.

So what does this actually look like? When a VM is powered up on an ESX host with Nehalem processors, the amount of host memory in use will not drop as the VM uses less memory or becomes idle. Those of us who have been using ESX for a long time likely found this scenario disturbing.

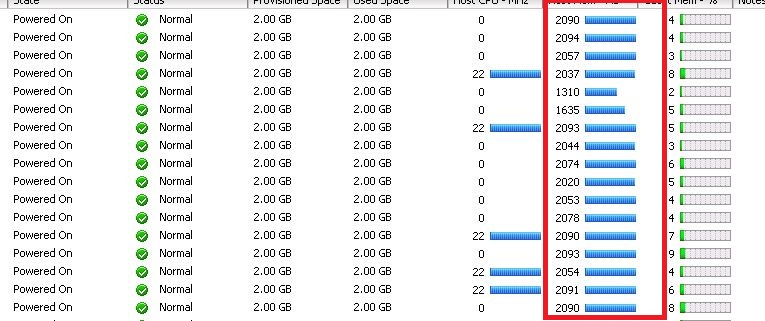

From vSphere Client (red highlighted section shows guest taking all of the 2GB assigned memory, yet memory usage in the guest is very low):

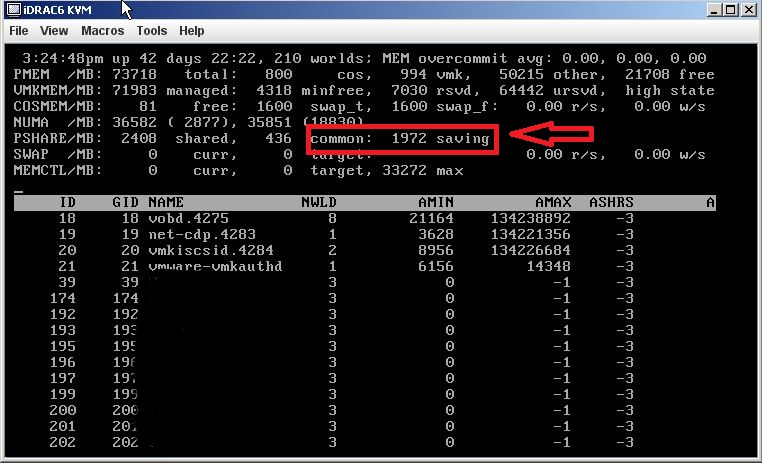

From esxtop (red highlighted section shows almost no memory being shared with page sharing):

The above screenshots show a host that is under-committed on memory, so no page sharing is occurring. If the host becomes over-committed, page sharing kicks in automatically by breaking up large pages into small pages. You can force the use of small pages on all guests all the time by changing the value of the advanced option Mem.AllocGuestLargePage to 0. I don’t really see any reason to do this. Remember that TPS isn’t broken, and what you see in the above screenshots is normal and expected.

Once host memory is over-committed (or if you use the advanced option), memory sharing kicks in and things look like they normally do when page sharing is taking place.

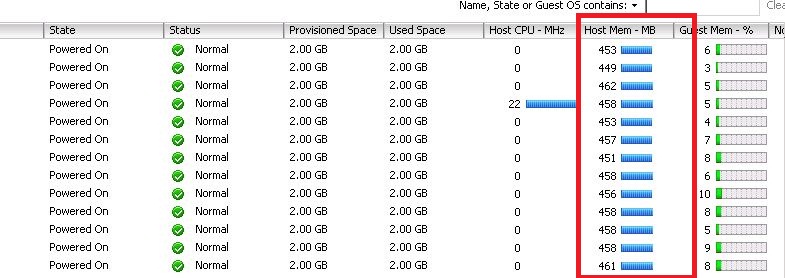

From the vSphere Client (red highlighted section shows guest taking very little of the assigned 2GB memory as page sharing has kicked in):

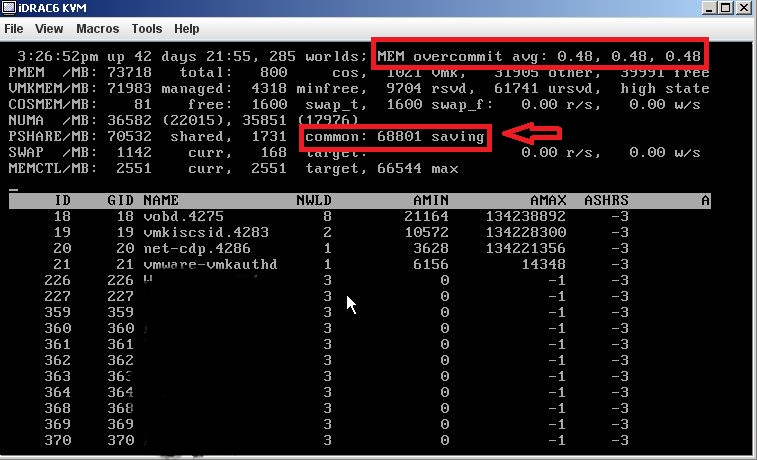

From esxtop (red highlighted sections show a large amount of shared memory and the host is over-committed on memory by 48%):

A quick note on the esxtop screenshot above: it was taken from a VDI environment where all workloads are identical, which explains the high number of shared pages. The host was also over-committed more than normal because the screenshot was taken during host maintenance.
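If you are wondering what a figure like 48% means, here is a small sketch of the arithmetic. It assumes the over-commit level is the ratio of total requested memory to host memory, minus 1, and it simplifies "requested memory" to just the configured VM memory. The function name and the host and VM sizes are hypothetical numbers I picked to land on 48%, not values from the screenshot.

```python
def overcommit_level(total_vm_memory_mb, host_memory_mb):
    """Assumed definition: requested memory / host memory, minus 1.
    A result of 0 or less means the host is not over-committed."""
    return total_vm_memory_mb / host_memory_mb - 1


# Hypothetical host: 100GB of memory running 74 desktops with 2GB each.
host_mb = 100 * 1024
vm_total_mb = 74 * 2 * 1024

level = overcommit_level(vm_total_mb, host_mb)
print(f"over-committed by {level:.0%}")
```

With the same assumed definition, a host whose VMs are configured with less memory than the host has yields a negative value, which is the under-committed case shown in the earlier screenshots.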

I hope this clears up some of the confusion around TPS on modern Intel/AMD processors. In short, don’t get hung up on the fact that TPS isn’t kicking in like it did with older processors. Nothing is broken, TPS is working as expected, and it will kick in when you actually need it.