As server and storage hardware continues to evolve, and now that Citrix has introduced Machine Creation Services (MCS) as an alternative deployment methodology to complement Provisioning Server (PvS), architects and engineers have more flexibility than ever in virtual desktop infrastructure (VDI) design. While MCS is somewhat limited, PvS offers flexibility in write cache and vDisk location. These choices carry performance, redundancy, and other tradeoffs, however, and it’s important to fully understand them when designing a solution.

With regard to write cache location, PvS offers four options: target device RAM, target device hard disk, server cache, and difference disk. The implications of each are discussed here, but this article focuses on one in particular: RAM. It’s the fastest, but it’s also the most expensive and typically the most limited in capacity. Also, unlike the other options, which grow the cache file as needed (up to the limit of available drive space), RAM cache has a fixed size, and when you run out, bad things happen. Specifically, Citrix states that “if more different sectors are written than the size of the cache, the device will hang.” It’s important to understand exactly what that means, especially since it isn’t quite what you might think, at least not at the time of this writing. The sections below illustrate RAM cache’s seemingly unusual behavior, explain why it occurs, dig deeper into specific examples, and discuss what we might see in the future.

Test Configuration

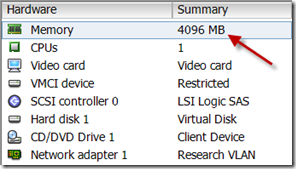

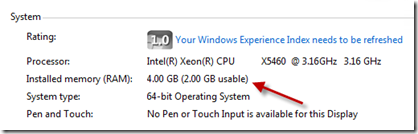

The tests below use XenDesktop 5 SP1 and Provisioning Server 5.6 SP1. The target VM runs Windows 7 64-bit SP1 on vSphere 4.1 and is configured with 4 GB of RAM.

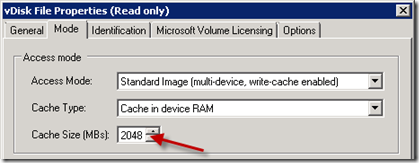

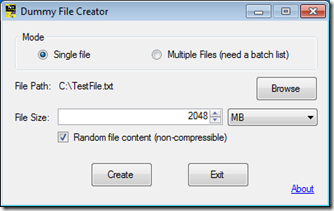

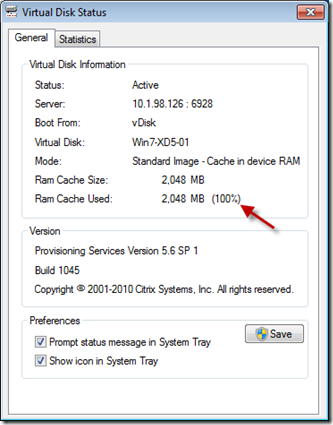

The vDisk is configured with a 2 GB RAM cache.

Because the RAM cache comes out of the VM’s memory, this leaves 2 GB of the 4 GB total usable by Windows.

The Clown Car

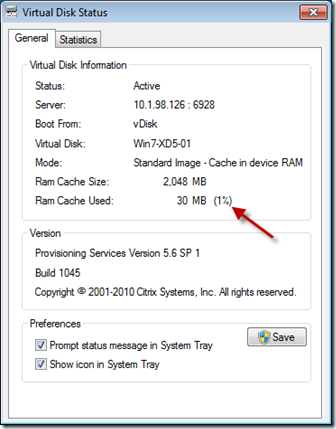

We’ll begin with a fresh VDI session. The PvS system tray applet shows that 1% of the RAM cache is in use.

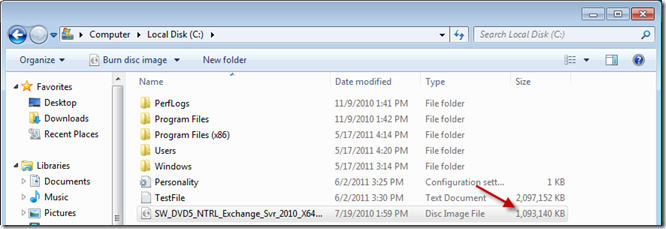

We’ll then saturate the RAM cache by generating a 2 GB test file.

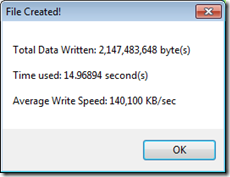

The test file is created successfully, and the RAM cache is maxed out at 100%.

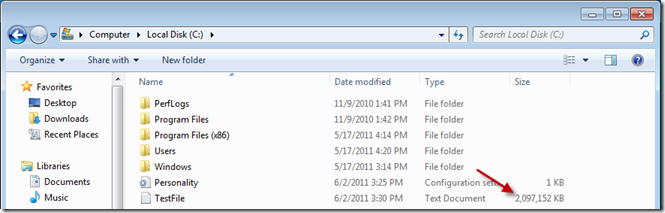

Since we started with 30 MB and wrote another 2048 MB, that should be it for our session, right? Not quite. Let’s copy a 1 GB Exchange ISO from the network…

…and download a 900 MB Windows service pack from the Internet.

“Shut the front door!” you say? “Does Windows still work?” Well, this video of the Swedish Chef plays perfectly.

I’m also able to create and save content in Paint, WordPad, etc. Weird, right? Let’s take a look at why this occurs.

The Explanation (per Citrix engineering)

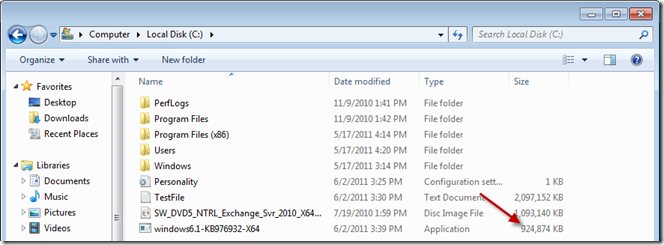

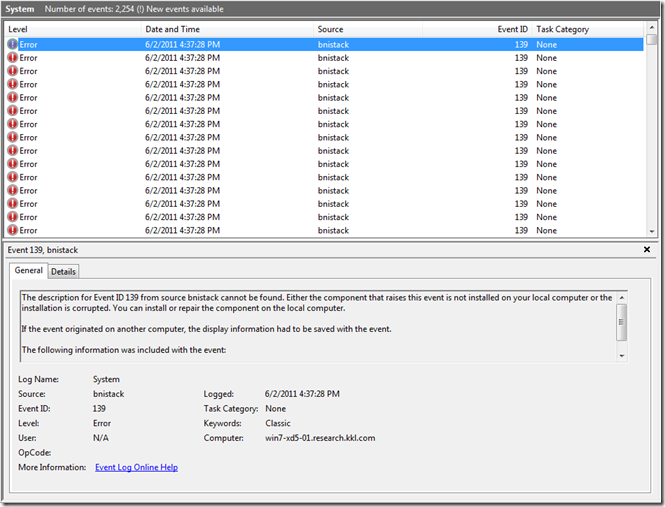

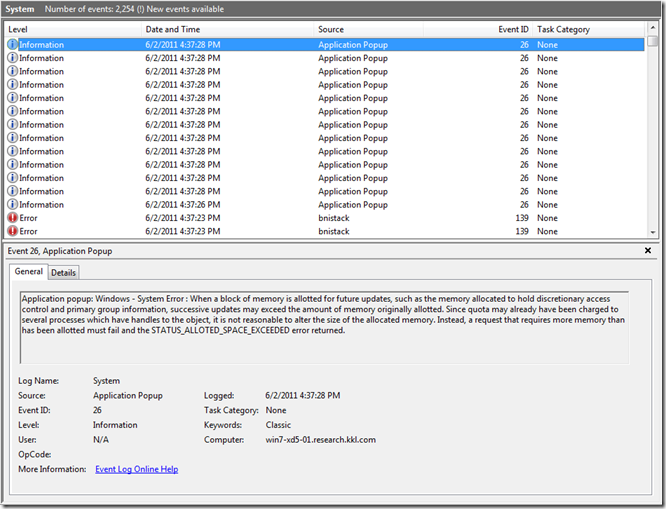

When MIoProcessWcRamWriteTransaction, a routine in the Citrix network stack interface driver (bnistack), detects insufficient memory, it returns success to the caller (Windows) without actually saving the data to the RAM cache. As long as Windows never reads that data back, practically unlimited data can be written, though other factors such as available disk space still apply (Windows will prevent you from creating a 10 GB file when you have 8 GB free on your vDisk, for example). Once the RAM cache becomes saturated, errors are logged as follows:

Unfortunately, by the time you attempt to access the Event Log, your system is likely frozen, and if it’s a Standard image without redirected log files, you’re out of luck once the frozen session is reset.
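To make the explanation above concrete, here is a toy Python model of a fixed-size, sector-based write cache whose write path always reports success. It is a sketch under stated assumptions, not Citrix code: the class name `RamWriteCache`, its sector bookkeeping, and the exception that stands in for the device hang are all hypothetical. It models only the behavior Citrix engineering describes; it does not reproduce the broader freezes seen in the examples below.

```python
class RamWriteCache:
    """Hypothetical model of a fixed-size, sector-based RAM write cache.

    Not Citrix code: it only mimics the described behavior, where a write
    that does not fit is acknowledged as successful but never stored.
    """

    def __init__(self, capacity_sectors: int) -> None:
        self.capacity = capacity_sectors
        self.sectors = {}      # sector number -> data actually held in the cache
        self.dropped = set()   # sectors acknowledged to Windows but never stored

    def write(self, sector: int, data: bytes) -> bool:
        if sector in self.sectors or len(self.sectors) < self.capacity:
            # Rewriting a cached sector, or there is still room: store the data.
            self.sectors[sector] = data
            self.dropped.discard(sector)
        else:
            # Cache saturated: silently discard the data.
            self.dropped.add(sector)
        # Either way, the caller is told the write succeeded.
        return True

    def read(self, sector: int) -> bytes:
        if sector in self.dropped:
            # The data is gone; the real device hangs at this point.
            raise RuntimeError(f"sector {sector} was never cached: device hangs")
        # Sectors never written come from the read-only vDisk (placeholder here).
        return self.sectors.get(sector, b"")


cache = RamWriteCache(capacity_sectors=4)
acks = [cache.write(s, b"data") for s in range(6)]  # every write is acknowledged
print(acks)            # [True, True, True, True, True, True]
print(cache.read(0))   # b'data'
try:
    cache.read(5)
except RuntimeError as err:
    print(err)         # sector 5 was never cached: device hangs
```

Six writes into a four-sector cache all "succeed", reading back an early sector works, and only a read of a dropped sector fails, which is why the session keeps running until Windows asks for data that was never stored.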

Digging Deeper

What does the explanation above really mean? Does it hold up in practice? Where does excess data go if it isn’t actually written? What happens when you attempt to access excess data? The examples below explore these questions.

Example 1

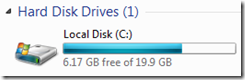

Upon logging on to a fresh VDI session and opening my test file creator, I have over 6 GB free on the C: drive…

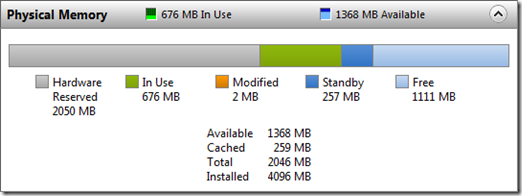

…and memory usage is as follows:

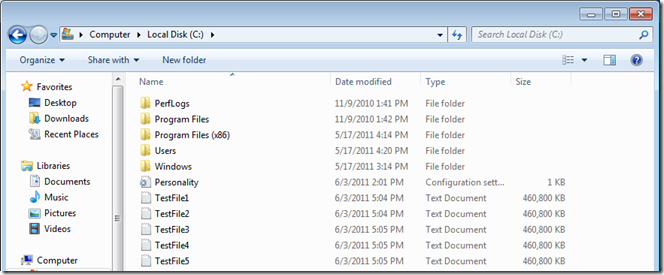

I then create five separate 450 MB test files.

As the files are created RAM cache utilization increases, memory in use remains roughly constant, standby memory (Windows system cache) increases, and free memory decreases.

| | RAM Cache | Memory In Use | Standby Memory | Free Memory |
|---|---|---|---|---|
| Start | 20 MB (1%) | 676 MB | 257 MB | 1111 MB |
| TestFile1 | 472 MB (23%) | 684 MB | 711 MB | 650 MB |
| TestFile2 | 922 MB (45%) | 684 MB | 1161 MB | 204 MB |
| TestFile3 | 1380 MB (67%) | 649 MB | 1385 MB | 10 MB |
| TestFile4 | 1831 MB (89%) | 648 MB | 1386 MB | 0 MB |
| TestFile5 | 2048 MB (100%) | 645 MB | 1394 MB | 4 MB |
| TestFile*n* | 2048 MB (100%) | ~645 MB | ~1394 MB | ~4 MB |
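The RAM cache column of the table can be checked with a short Python script. It uses only the figures from the table and the 2048 MB cache size; rounding to the nearest whole percent is an assumption that happens to reproduce the applet’s readings.

```python
CACHE_MB = 2048  # size of the vDisk's RAM cache in this test

# RAM cache usage in MB after each step, taken from the table above
usage_mb = [("Start", 20), ("TestFile1", 472), ("TestFile2", 922),
            ("TestFile3", 1380), ("TestFile4", 1831), ("TestFile5", 2048)]


def percent_used(mb: int, capacity: int = CACHE_MB) -> int:
    # Rounding to the nearest whole percent matches the applet's readings
    return round(100 * mb / capacity)


def growth_per_step(rows):
    # MB of RAM cache consumed by each step relative to the previous one
    return [(name, mb - prev) for (_, prev), (name, mb) in zip(rows, rows[1:])]


for name, mb in usage_mb:
    print(f"{name:10} {mb:5} MB {percent_used(mb):4}%")
for name, delta in growth_per_step(usage_mb):
    print(f"{name:10} +{delta} MB")
```

The first four files each add roughly 450 MB, but TestFile5 adds only 217 MB before the cache reaches 100%, so roughly 233 MB of that file was acknowledged without being cached.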

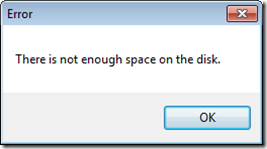

I can continue creating test files up to the limit of available hard drive space, at which point the following error is generated:

In other words, similar to “The Clown Car” above, I was able to generate test files that far exceeded the RAM cache before encountering an issue, and if I’d had a larger hard drive I would have been able to generate more.

So where did the data go? The table above shows that as test files are created, RAM cache usage increases in direct proportion to test file size until no more RAM cache remains. Standby memory increases and free memory decreases in a similar manner until free memory runs out (around TestFile3), presumably because Windows is caching the most recently created data for quick access later, though this can’t really be considered “storage” for purposes of this analysis.

So what about the excess? Let’s look at another example…

Example 2

Five 450 MB test files are created, saturating the RAM cache. Attempting to open any of them results in an immediate system freeze and eventual reset. Likewise, deleting one or more of them and then attempting to open one that remains results in an immediate freeze and eventual reset. Finally, deleting all five files, creating a sixth, and attempting to open it also results in an immediate freeze and eventual reset. This suggests that once the RAM cache is saturated, attempting to access any content within it freezes the system.

Example 3

Four 450 MB test files are created, and RAM cache is at 89%. One of them is opened. A fifth is then created, saturating the RAM cache. We would appear to be in the same position as in the previous example, but this time any of the first four files can be opened again, while opening the fifth freezes the system. This suggests that opening a file before the RAM cache is saturated somehow “protects” cache contents.

Example 4

Four 450 MB test files are created, and RAM cache is at 89%. One of them is deleted. RAM cache remains at 89%. A fifth file is created. RAM cache still remains at 89%. This suggests that space freed by deletion isn’t reclaimed (or at least isn’t reported as reclaimed by the Virtual Disk Status applet) but is available for reuse.
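One plausible reading of Example 4 can be sketched in Python, assuming the cache tracks distinct sectors (as Citrix’s “more different sectors” wording implies) and that the file system places the new file in the sectors the deleted file released. `SectorCache` and `ToyVolume` are hypothetical, one sector stands in for 1 MB, and real NTFS allocation is more complicated than the lowest-free-first rule used here.

```python
class SectorCache:
    """Hypothetical cache that counts distinct sectors ever written."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.cached = set()

    def write(self, sectors) -> None:
        for s in sectors:
            # A sector already in the cache is overwritten in place; a new one
            # is added only while there is room.
            if s in self.cached or len(self.cached) < self.capacity:
                self.cached.add(s)

    def percent_used(self) -> int:
        return round(100 * len(self.cached) / self.capacity)


class ToyVolume:
    """Hypothetical file system that always allocates the lowest free sectors."""

    def __init__(self, cache: SectorCache, total_sectors: int) -> None:
        self.cache = cache
        self.free = list(range(total_sectors))  # kept sorted
        self.files = {}

    def create(self, name: str, size: int) -> None:
        sectors, self.free = self.free[:size], self.free[size:]
        self.files[name] = sectors
        self.cache.write(sectors)

    def delete(self, name: str) -> None:
        # Only the volume's free list changes; the cache is never told, so
        # the sectors stay counted against it.
        self.free = sorted(self.free + self.files.pop(name))


cache = SectorCache(capacity=2048)            # 2048 "MB" of RAM cache
volume = ToyVolume(cache, total_sectors=8192)
volume.create("session", 20)                  # baseline usage at logon
for i in range(1, 5):
    volume.create(f"TestFile{i}", 450)
print(cache.percent_used())                   # 89
volume.delete("TestFile2")
print(cache.percent_used())                   # 89: nothing reclaimed
volume.create("TestFile5", 450)               # lands in TestFile2's old sectors
print(cache.percent_used())                   # 89: sectors reused
```

Under this model, usage never drops on delete, and it grows again only when new data lands on sectors the cache has not seen before.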

Example 5

Four 450 MB test files are created, and RAM cache is at 89%. Attempting to open all four maxes out memory in use, the system begins paging, the page file consumes additional RAM cache, and when the RAM cache becomes saturated, the system freezes.

Conclusions

The examples above suggest the following:

- RAM cache can be reused, but not reclaimed, up to the point of saturation.

- RAM cache saturation will likely not be apparent to end users.

- Once the saturation point is reached, data is at risk.

- If no files in the cache were accessed prior to saturation, any attempt to access cache contents will result in a freeze.

- If any file in the cache is accessed before saturation, content written to the cache before saturation appears to be “protected.”

- Page file size and usage are another important consideration.

Future

Citrix engineering has acknowledged that this is a bug and has indicated that the fix should be to crash (BSOD) when the RAM cache becomes saturated. “The fix should be to crash? Really?” Yes, following the philosophy that existing user data should be protected and further corruption prevented.

Final Thoughts

As a best practice, a proof of concept with write cache on the server is recommended, because it allows write cache size to be analyzed over time. Once confidence is established with regard to write cache size, a cost/benefit analysis can be performed to choose a write cache location.
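Turning proof-of-concept measurements into a RAM cache size can be sketched in Python. The function name, the 25% headroom, and the 256 MB rounding step are illustrative assumptions, not Citrix guidance; the point is to size against the observed peak, since a saturated RAM cache hangs the device rather than degrading gracefully. Samples should cover peak workloads, including page file activity (see Example 5).

```python
import math


def suggest_ram_cache_mb(samples_mb, headroom=0.25, step_mb=256):
    """Return (observed peak, suggested RAM cache size) in MB.

    samples_mb: write cache sizes recorded during the proof of concept.
    headroom:   extra fraction above the peak (illustrative default).
    step_mb:    round the suggestion up to a multiple of this (illustrative).
    """
    if not samples_mb:
        raise ValueError("need at least one write cache size sample")
    peak = max(samples_mb)
    # Size against the peak, not the average: running out of RAM cache hangs
    # the target device instead of slowing it down.
    target = peak * (1 + headroom)
    return peak, math.ceil(target / step_mb) * step_mb
```

Pass it the write cache sizes recorded at each sample point; if the suggested size is more RAM than the hosts can spare, that is a signal to weigh one of the disk-based write cache locations instead.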

Thanks to my colleagues Matt Liebowitz and Niraj Patel for their help with this post.