I was recently involved in a project to improve performance in a XenDesktop 4 / Provisioning Server 5.6 environment where users were reporting slow logon times and sluggish performance, and SAN statistics showed higher IOPS than we would have liked. The environment consists of two Desktop Delivery Controllers (DDCs) on Windows 2003 R2 and two Provisioning Servers (PvSs) on Windows 2008 R2, all virtual and spread across two Dell R710 vSphere 4.1 hosts that also host approximately 25 other servers. The desktops, 125 production and a handful of test/pilot Windows 7 VMs, are spread across four additional R710 vSphere 4.1 hosts. All hosts use a single EqualLogic PS6000X. Both the vDisk and write cache were located on a file cluster that had one node on each of the same two hosts as the DDCs and PvSs. The cluster’s sole purpose was to provide high availability in the event of a single PvS failure.

As I investigated the performance issues I reviewed a good deal of product documentation, best practice guides, and forum posts, and spent hours on the phone with Citrix technical support. Still, the issues remained. I then attended a VDI summit at Microsoft and was fortunate enough to speak to a Citrix engineer who happened to be presenting. As we took a walk down the hall I described the issues and environment, and by the time we got back he’d identified the problem and the solution. Though at first blush locating the vDisk and write cache on a highly available network share appears to make sense, doing so prevents PvS from using the Windows system cache for vDisk reads.

The system cache stores frequently accessed information in RAM but only applies to local files (or files that appear local, as is the case with an iSCSI drive). So in the original environment, every time a target VM issued a read request it was sent through the network to a PvS, through the network again to the active file cluster node, through the network again to the SAN, then back to the file cluster node, back to the PvS, and back to the target VM. Granted, approximately 50% of the PvS-to-file-cluster traffic went through a virtual switch rather than the physical network, since each target VM had a 50% chance of using the PvS on the same host as the active file cluster node. The point remains: each request resulted in some degree of physical network traffic, OS overhead on both the PvS and the file cluster node, and read I/O on the SAN.

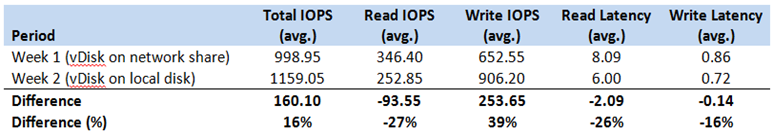
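To get a feel for why losing the system cache matters, here is a toy model of many target VMs reading the same vDisk blocks through one PvS. It is only a sketch: `ReadCache` and `simulate` are hypothetical names, the LRU policy is a stand-in rather than a description of how the Windows system cache actually works, and the block count is made up. Only the figure of 125 VMs comes from the environment described above.

```python
from collections import OrderedDict


class ReadCache:
    """Toy LRU block cache standing in for RAM-based file caching on a PvS."""

    def __init__(self, capacity_blocks: int):
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()
        self.backend_reads = 0  # reads that had to go to the storage back end

    def read(self, block_id: int) -> None:
        if block_id in self.blocks:
            # Cache hit: served from RAM, no back-end read.
            self.blocks.move_to_end(block_id)
            return
        # Cache miss: one read against the back end (file cluster / SAN).
        self.backend_reads += 1
        if self.capacity > 0:
            self.blocks[block_id] = True
            if len(self.blocks) > self.capacity:
                self.blocks.popitem(last=False)  # evict least recently used


def simulate(vm_count: int, blocks_per_boot: int, capacity_blocks: int) -> int:
    """Each VM reads the same blocks in order; return total back-end reads."""
    cache = ReadCache(capacity_blocks)
    for _ in range(vm_count):
        for block in range(blocks_per_boot):
            cache.read(block)
    return cache.backend_reads


# Hypothetical workload: 125 VMs each reading the same 1,000 blocks.
no_cache = simulate(vm_count=125, blocks_per_boot=1000, capacity_blocks=0)
with_cache = simulate(vm_count=125, blocks_per_boot=1000, capacity_blocks=1000)
print(f"back-end reads without cache: {no_cache}, with cache: {with_cache}")
```

In this model, a cache large enough to hold the shared blocks means only the first VM’s reads reach the back end. Real workloads are messier, but the shape of the effect is the same: shared vDisk content is exactly the kind of data a RAM cache serves well.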

The solution was to create local storage on each PvS, relocate the vDisk from the file cluster to the local storage (now two sets of files instead of one), modify the vDisk Store properties to point to the local storage, and define a process to keep vDisk files in sync between the PvSs as updates are made. Also, most importantly, I increased the RAM on each PvS from 2 GB to 6 GB. Together, these changes allowed the most frequently accessed information from the vDisk to be cached in RAM on each PvS, thus reducing read IOPS, read latency, and write latency as shown below, and eliminating the trip through the file cluster node.

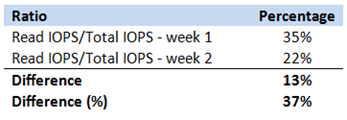
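The process for keeping vDisk files in sync between the PvSs can be as simple as a one-way copy from whichever server holds the updated files. The following is a minimal sketch of that idea, not the process used in this project. The function names are hypothetical, and the `.vhd` and `.pvp` extensions are my assumption about which files make up a vDisk, so adjust them for your store.

```python
import shutil
from pathlib import Path

# Assumption: vDisk images and their property files use these extensions.
VDISK_EXTENSIONS = (".vhd", ".pvp")


def files_differ(src: Path, dst: Path) -> bool:
    """Cheap change check: target missing, different size, or clearly older."""
    if not dst.exists():
        return True
    s, d = src.stat(), dst.stat()
    # Allow 2 seconds of slack for file systems with coarse timestamps.
    return s.st_size != d.st_size or (s.st_mtime - d.st_mtime) > 2.0


def sync_vdisk_store(master: Path, replica: Path,
                     extensions=VDISK_EXTENSIONS) -> list[str]:
    """Copy new or updated vDisk files from master to replica.

    Returns the names of the files that were copied.
    """
    replica.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(master.iterdir()):
        if src.is_file() and src.suffix.lower() in extensions:
            dst = replica / src.name
            if files_differ(src, dst):
                shutil.copy2(src, dst)  # copy2 also copies the modification time
                copied.append(src.name)
    return copied
```

Called with the local store path on the updated PvS as `master` and the other server’s store as `replica`, this copies only what changed. In practice a tool such as robocopy does the same job. Whatever the tool, copy only when the vDisk files are not being modified.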

Note that in the SAN statistics above, read IOPS decreased 27% even though total IOPS increased 16%. Normalizing for that increase indicates the actual impact was higher.

Stated another way, if total IOPS had remained constant at 998.95 from week 1 to week 2, based on the numbers above we would expect read IOPS to be approximately 22% of that in week 2. That’s 217.92, which is 37% less than the week 1 figure of 346.40.
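The normalization above can be reproduced with a few lines of arithmetic. This sketch assumes that 998.95 and 346.40 are the week 1 total and read IOPS respectively, and it uses the rounded percentage changes quoted in the text, so it lands near the 217.92 figure rather than exactly on it.

```python
# Figures quoted in the text.
WEEK1_TOTAL_IOPS = 998.95
WEEK1_READ_IOPS = 346.40   # assumed to be the week 1 read IOPS
READ_CHANGE = -0.27        # read IOPS fell 27% (rounded)
TOTAL_CHANGE = 0.16        # total IOPS rose 16% (rounded)


def normalized_read_iops(total1, read1, read_change, total_change):
    """Return (week 2 read share, read IOPS if total had stayed at total1)."""
    read2 = read1 * (1 + read_change)
    total2 = total1 * (1 + total_change)
    read_share2 = read2 / total2
    return read_share2, total1 * read_share2


share, normalized = normalized_read_iops(
    WEEK1_TOTAL_IOPS, WEEK1_READ_IOPS, READ_CHANGE, TOTAL_CHANGE
)
reduction = 1 - normalized / WEEK1_READ_IOPS

print(f"week 2 read share of total IOPS: {share:.1%}")
print(f"read IOPS at constant total:     {normalized:.2f}")
print(f"normalized read reduction:       {reduction:.1%}")
```

With these inputs, reads come out at roughly 22% of total IOPS in week 2, and the normalized reduction is about 37%, matching the figures above to within rounding.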

It’s important to note that with approximately 25 non-VDI servers utilizing the same storage infrastructure these are certainly not isolated results – the statistics above cannot be attributed to the vDisk relocation alone. However, since the environment was fairly static for the periods immediately preceding and following this project it’s assumed that 1) non-VDI activity remained roughly constant, and 2) the 16% increase is due to the movement of several sets of vDisk files within the SAN. Obviously every environment is different in terms of equipment, topology, workload, etc. Therefore this analysis is intended to illustrate the performance gains in one particular environment, not to state that you will have the same gains in your environment.

In any event, the changes described above immediately improved VDI performance, and a few weeks later relocating write cache from the file cluster to a local disk on each VM improved performance further. Specifically, relocating the write cache left write-related SAN I/O roughly constant but eliminated the network traffic and OS overhead associated with PvS and the file cluster node. This also allowed us to decommission the file cluster nodes and reclaim the host and SAN resources they consumed. It’s worth noting that since the VDI environment shares the SAN with the production servers, the decreased read IOPS and corresponding decreases in both read and write latency provided benefits system-wide.

I found this solution interesting from a technical perspective, but equally interesting is that so little information is out there on the impact of vDisk location. The Citrix support staff I spent hours on the phone with apparently weren’t aware of it, and Citrix’s “Implementing a Virtual Desktop Infrastructure” course didn’t cover it (though ironically the course materials show a vDisk store with a UNC path). Also, the product documentation doesn’t cover it and the best practices guide arguably contains misleading information:

> All traffic that occurs between the vDisk and the target device passes through the PVS machine regardless of where the vDisk resides. Using Windows Server 2003 file caching features can improve vDisk deployment efficiency.

A fair reading is that “all” vDisk traffic “can be improved” by caching, when in fact “only” traffic to a local vDisk is affected, and it “will be dramatically improved.”

Now that I know what I’m looking for, I’ve found recommendations regarding vDisk location and system cache in a blog post, a forum post, and a handful of similar resources elsewhere. Given the significant performance impact on both VDI and other components that share the same storage infrastructure, though, I’m surprised that this topic isn’t discussed more prominently in more formal documentation. That said, I hope this information is helpful to anyone planning or troubleshooting a similar environment.